Audio scene classification using gated recurrent neural network

Summary - Audio scene classification has received attention over the past years. It is the recognition of the surrounding environment with the support of background sound. This paper proposes three systems for classification based on Gated Recurrent Neural Networks. Each system consists of two main parts: feature extraction and classification. For feature extraction, we use the Mel-frequency cepstral coefficients (MFCC) algorithm; these features are then the input to the classification stage. For classification, we use the Gated Recurrent Neural Network approach, which comprises two main algorithms, Long short-term memory and Gated recurrent unit. We test the proposed systems on the LITIS Rouen dataset, which comprises 19 categories and has a length of 1,500 minutes. The highest classification rate achieved by our proposed systems is 94.92%. This is a fairly high rate in audio scene classification and is 3.0% higher than that of the original paper.

Trang 1

Trang 2

Trang 3

Trang 4

Trang 5

Tóm tắt nội dung tài liệu: Phân loại bối cảnh âm thanh dựa trên gated recurrent neural network

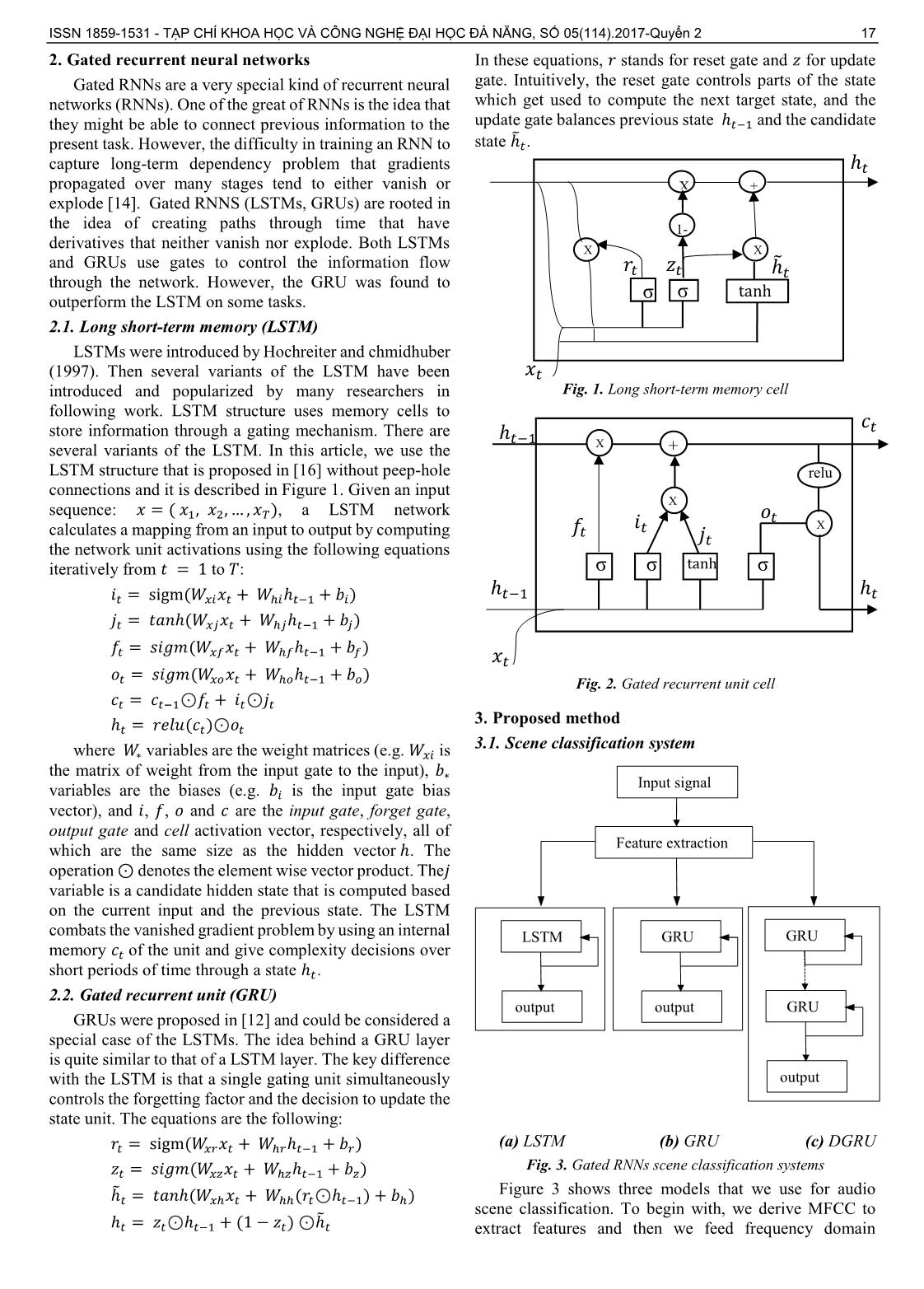

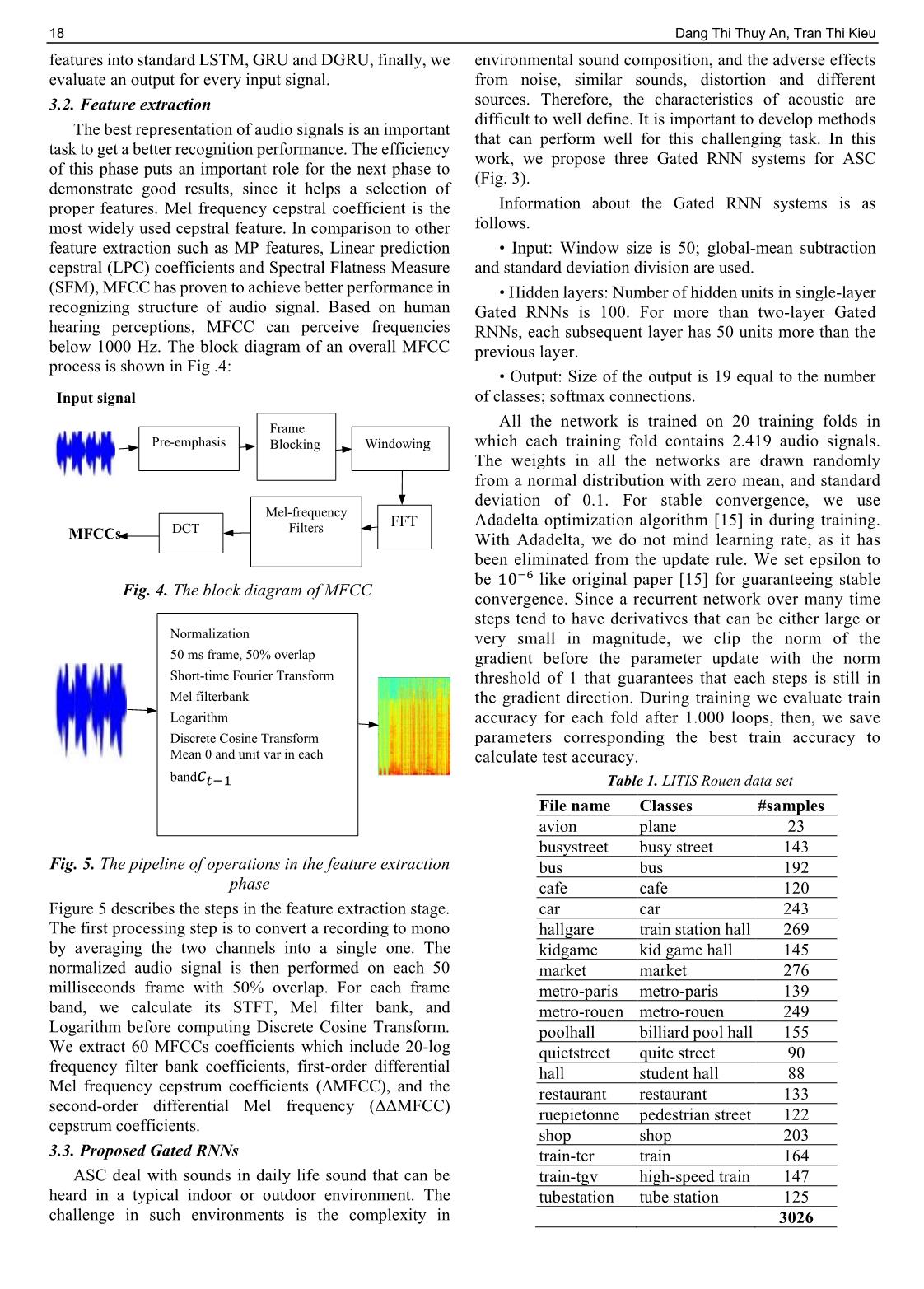

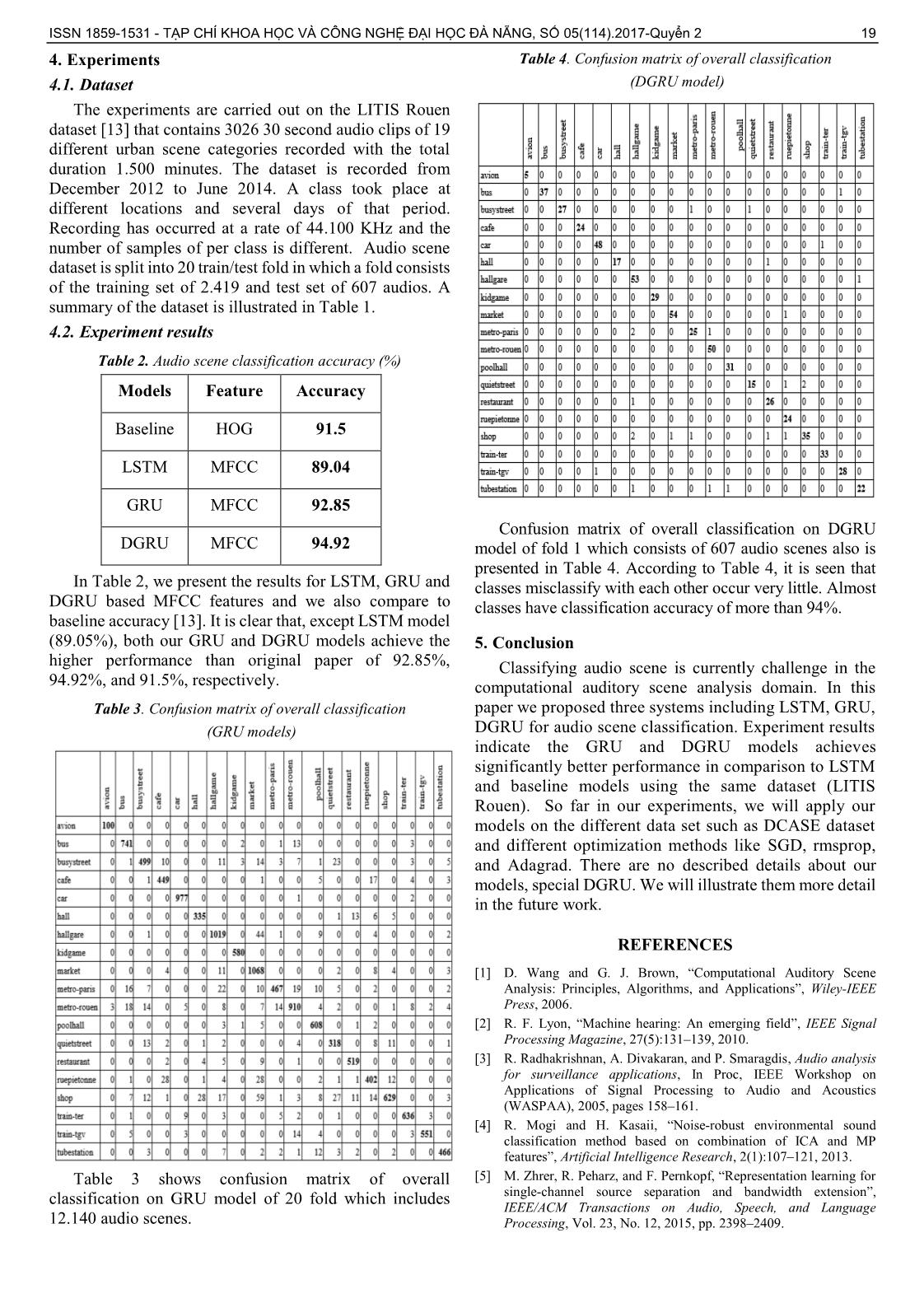

AUDIO SCENE CLASSIFICATION USING GATED RECURRENT NEURAL NETWORK
PHÂN LOẠI BỐI CẢNH ÂM THANH DỰA TRÊN GATED RECURRENT NEURAL NETWORK

Dang Thi Thuy An, Tran Thi Kieu
College of Information Technology, The University of Danang; dttan@cit.udn.vn, ttkieu@cit.udn.vn

Abstract - Audio scene classification has received increasing attention in the past few years. It is the recognition of the surrounding environment with the support of the background sound. This paper proposes a system for classifying audio scenes that is based on Gated Recurrent Neural Networks. The system includes two main parts: feature extraction and classification. In the feature extraction part, Mel-frequency cepstral coefficients (MFCC) are extracted; these features are then used for classification by Gated RNNs, which consist of long short-term memory (LSTM) networks and networks based on the gated recurrent unit (GRU).
Experiments are conducted on the LITIS Rouen dataset, which includes 19 classes and contains about 1,500 minutes of audio scene recordings. Our proposed system achieves a best recognition accuracy of 94.92%, which is quite high for audio scene classification and about 3.0% higher than the baseline system.

Key words - audio scene classification; MFCC; GRNNs; LSTM; GRU.

1. Introduction

Audio signals play an essential role in many applications, such as audio scene classification, home automation, multimedia indexing, and robot navigation. In recent years, audio scene classification has received more and more attention, since it is an important problem in computational auditory scene analysis (CASA) [1, 2]. Audio scene analysis is a challenging machine learning task, and solving it is important so that a device can recognize its surrounding environment from the sound it captures.

Audio scene classification is a complex problem because an audio scene related to a given location can contain a wide variety of single sound events, while only a few of them provide information about the scene. Hence, it is challenging to obtain a good representation and good classification performance. Over the last few years, a number of feature extraction methods have been proposed for related problems such as speech recognition and audio event classification, for example Gammatone filters, Histogram of Oriented Gradients (HOG) and Gabor dictionaries [4]. One of the most prominent features used to characterize an audio scene is the Mel-frequency cepstral coefficients (MFCC). MFCC features have demonstrated good performance in speech and music applications. These features are extracted from a time-frequency (TF) representation and essentially capture non-linear information about the power spectrum of the signal.
Following the success of MFCC, in this paper we also choose MFCC as the feature extraction method.

The choice of classifier also plays an important role in an audio scene recognition system. In early work on audio scene classification, Gaussian mixture models (GMMs), hidden Markov models (HMMs), and support vector machines (SVMs) were commonly applied for classification. In recent years, deep neural networks (DNNs) have demonstrated their superiority when applied to audio data, for instance [5, 6, 7]. Additionally, the problem of automatic environmental sound recognition, known as acoustic scene classification (ASC), has received more and more attention from the research community in the last few years. One of the well-known recent ASC challenges is the DCASE 2016 challenge [8], which gained great attention from researchers. Among the classification approaches used in this challenge, deep neural networks represent the state of the art [9, 10].

Motivated by the great success of DNNs in processing sequential data, in this paper we favor gated recurrent neural networks (GRNNs) [11, 12] for audio scene classification. GRNNs include the long short-term memory (LSTM) and networks based on the gated recurrent unit (GRU). We evaluate gated RNNs on the LITIS Rouen dataset [13]. The goal of this work is to investigate the applicability of Gated RNN models to ASC and to compare their performance with the baseline [13]. In addition, we compare the performance of the different models proposed in this paper.

The paper is organized as follows. We first introduce Gated RNNs in Section 2 and present the proposed system in Section 3. We then describe the dataset used in our evaluation and show the results in Section 4. Finally, we draw a conclusion in Section 5.

ISSN 1859-1531 - TẠP CHÍ KHOA HỌC VÀ CÔNG NGHỆ ĐẠI HỌC ĐÀ NẴNG, SỐ 05(114).2017-Quyển 2

2. Gated recurrent neural networks

Gated RNNs are a special kind of recurrent neural network (RNN).
One of the appeals of RNNs is the idea that they might be able to connect previous information to the present task. However, the difficulty in training an RNN to capture long-term dependencies is that gradients propagated over many stages tend to either vanish or explode [14]. Gated RNNs (LSTMs, GRUs) are rooted in the idea of creating paths through time whose derivatives neither vanish nor explode. Both LSTMs and GRUs use gates to control the information flow through the network. However, the GRU was found to outperform the LSTM on some tasks.

2.1. Long short-term memory (LSTM)

LSTMs were introduced by Hochreiter and Schmidhuber (1997). Several variants of the LSTM have since been introduced and popularized by many researchers in subsequent work. The LSTM structure uses memory cells to store information through a gating mechanism. In this article, we use the LSTM structure proposed in [16], without peephole connections; it is described in Figure 1. Given an input sequence $x = (x_1, x_2, \dots, x_T)$, an LSTM network calculates a mapping from input to output by computing the network unit activations using the following equations iteratively from $t = 1$ to $T$:

$$
\begin{aligned}
i_t &= \mathrm{sigm}(W_{xi} x_t + W_{hi} h_{t-1} + b_i) \\
j_t &= \tanh(W_{xj} x_t + W_{hj} h_{t-1} + b_j) \\
f_t &= \mathrm{sigm}(W_{xf} x_t + W_{hf} h_{t-1} + b_f) \\
o_t &= \mathrm{sigm}(W_{xo} x_t + W_{ho} h_{t-1} + b_o) \\
c_t &= c_{t-1} \odot f_t + i_t \odot j_t \\
h_t &= \mathrm{relu}(c_t) \odot o_t
\end{aligned}
$$

where the $W_{*}$ variables are the weight matrices (e.g. $W_{xi}$ is the matrix of weights from the input to the input gate), the $b_{*}$ variables are the biases (e.g. $b_i$ is the input gate bias vector), and $i$, $f$, $o$ and $c$ are the input gate, forget gate, output gate and cell activation vector, respectively, all of which are the same size as the hidden vector $h$. The operation $\odot$ denotes the element-wise vector product. The variable $j$ is a candidate hidden state that is computed from the current input and the previous state.
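The LSTM update above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the authors' implementation: the names `lstm_step`, `init_params` and `affine` are hypothetical, vectors and matrices are ordinary lists, the biases are assumed to start at zero, and the weights are drawn from a zero-mean normal distribution with standard deviation 0.1 as the paper states for its networks.

```python
import math
import random


def sigm(v):
    """Element-wise logistic sigmoid."""
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def relu(v):
    """Element-wise rectifier, used in h_t = relu(c_t) * o_t."""
    return [max(0.0, a) for a in v]


def affine(W_x, x, W_h, h, b):
    """Compute W_x x + W_h h + b for row-major nested-list matrices."""
    return [
        sum(w * a for w, a in zip(row_x, x))
        + sum(w * a for w, a in zip(row_h, h))
        + b_k
        for row_x, row_h, b_k in zip(W_x, W_h, b)
    ]


def lstm_step(x_t, h_prev, c_prev, params):
    """One time step; params maps a gate name to its (W_x, W_h, b)."""
    def pre(name):
        W_x, W_h, b = params[name]
        return affine(W_x, x_t, W_h, h_prev, b)

    i_t = sigm(pre("i"))                       # input gate
    j_t = [math.tanh(a) for a in pre("j")]     # candidate state
    f_t = sigm(pre("f"))                       # forget gate
    o_t = sigm(pre("o"))                       # output gate
    # c_t = c_{t-1} * f_t + i_t * j_t (element-wise)
    c_t = [c * f + i * j for c, f, i, j in zip(c_prev, f_t, i_t, j_t)]
    # h_t = relu(c_t) * o_t (element-wise)
    h_t = [r * o for r, o in zip(relu(c_t), o_t)]
    return h_t, c_t


def init_params(n_in, n_hidden, seed=0):
    """Weights ~ N(0, 0.1^2); zero biases are an assumption of this sketch."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        g: (mat(n_hidden, n_in), mat(n_hidden, n_hidden), [0.0] * n_hidden)
        for g in "ijfo"
    }


# Run a toy 2-step sequence of 3-dimensional inputs through 4 hidden units.
params = init_params(n_in=3, n_hidden=4)
h, c = [0.0] * 4, [0.0] * 4
for x_t in [[0.1, -0.2, 0.3], [0.0, 0.5, -0.1]]:
    h, c = lstm_step(x_t, h, c, params)
```

Because the output passes through `relu` and a sigmoid gate, every component of `h` is non-negative in this variant, unlike the more common formulation that applies tanh to the cell state.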
The LSTM combats the vanishing gradient problem by using an internal memory $c_t$ of the unit, and makes complex decisions over short periods of time through a state $h_t$.

2.2. Gated recurrent unit (GRU)

GRUs were proposed in [12] and can be considered a special case of LSTMs. The idea behind a GRU layer is quite similar to that of an LSTM layer. The key difference from the LSTM is that a single gating unit simultaneously controls the forgetting factor and the decision to update the state unit. The equations are the following:

$$
\begin{aligned}
r_t &= \mathrm{sigm}(W_{xr} x_t + W_{hr} h_{t-1} + b_r) \\
z_t &= \mathrm{sigm}(W_{xz} x_t + W_{hz} h_{t-1} + b_z) \\
\tilde{h}_t &= \tanh(W_{xh} x_t + W_{hh} (r_t \odot h_{t-1}) + b_h) \\
h_t &= z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t
\end{aligned}
$$

In these equations, $r$ stands for the reset gate and $z$ for the update gate. Intuitively, the reset gate controls which parts of the state are used to compute the next target state, and the update gate balances the previous state $h_{t-1}$ and the candidate state $\tilde{h}_t$.

Fig. 1. Long short-term memory cell

Fig. 2. Gated recurrent unit cell

3. Proposed method

3.1. Scene classification system

Fig. 3. Gated RNNs scene classification systems: (a) LSTM, (b) GRU, (c) DGRU

Figure 3 shows the three models that we use for audio scene classification. First, we extract MFCC features; then we feed these frequency-domain features into a standard LSTM, GRU or DGRU; finally, we evaluate an output for every input signal.

3.2. Feature extraction

Finding a good representation of audio signals is important for achieving better recognition performance. The efficiency of this phase plays an important role in the next phase producing good results, since it helps the selection of proper features. The Mel-frequency cepstral coefficient is the most widely used cepstral feature.
In comparison to other feature extraction methods such as MP features, linear prediction cepstral (LPC) coefficients and the Spectral Flatness Measure (SFM), MFCC has proven to achieve better performance in recognizing the structure of audio signals. MFCC is based on human hearing perception: the mel scale is approximately linear below 1000 Hz and logarithmic above. The block diagram of the overall MFCC process is shown in Fig. 4.

Fig. 4. The block diagram of MFCC

Fig. 5. The pipeline of operations in the feature extraction phase

Figure 5 describes the steps in the feature extraction stage. The first processing step is to convert a recording to mono by averaging the two channels into a single one. The normalized audio signal is then split into 50-millisecond frames with 50% overlap. For each frame, we compute its STFT, apply the Mel filter bank and the logarithm, and then compute the Discrete Cosine Transform. We extract 60 MFCC coefficients, which include 20 log-frequency filter bank coefficients, the first-order differential Mel-frequency cepstrum coefficients (ΔMFCC), and the second-order differential Mel-frequency cepstrum coefficients (ΔΔMFCC).

3.3. Proposed Gated RNNs

ASC deals with everyday sounds that can be heard in a typical indoor or outdoor environment. The challenge in such environments is the complexity of the environmental sound composition and the adverse effects of noise, similar sounds, distortion and different sources. Therefore, the acoustic characteristics are difficult to define well. It is important to develop methods that can perform well for this challenging task. In this work, we propose three Gated RNN systems for ASC (Fig. 3). Information about the Gated RNN systems is as follows.

• Input: Window size is 50; global-mean subtraction and standard deviation division are used.
• Hidden layers: The number of hidden units in single-layer Gated RNNs is 100. For multi-layer Gated RNNs, each subsequent layer has 50 more units than the previous layer.
• Output: The size of the output is 19, equal to the number of classes; softmax connections.

All the networks are trained on 20 training folds, in which each training fold contains 2,419 audio signals. The weights in all the networks are drawn randomly from a normal distribution with zero mean and a standard deviation of 0.1. For stable convergence, we use the Adadelta optimization algorithm [15] during training. With Adadelta, we do not need to set a learning rate, as it has been eliminated from the update rule. We set epsilon to $10^{-6}$, as in the original paper [15], to guarantee stable convergence. Since a recurrent network over many time steps tends to have derivatives that can be either very large or very small in magnitude, we clip the norm of the gradient before the parameter update with a norm threshold of 1, which guarantees that each step is still in the gradient direction. During training, we evaluate the train accuracy for each fold after 1,000 iterations; then we save the parameters corresponding to the best train accuracy and use them to calculate the test accuracy.

Table 1. LITIS Rouen data set

| File name | Classes | #samples |
|---|---|---|
| avion | plane | 23 |
| busystreet | busy street | 143 |
| bus | bus | 192 |
| cafe | cafe | 120 |
| car | car | 243 |
| hallgare | train station hall | 269 |
| kidgame | kid game hall | 145 |
| market | market | 276 |
| metro-paris | metro-paris | 139 |
| metro-rouen | metro-rouen | 249 |
| poolhall | billiard pool hall | 155 |
| quietstreet | quiet street | 90 |
| hall | student hall | 88 |
| restaurant | restaurant | 133 |
| ruepietonne | pedestrian street | 122 |
| shop | shop | 203 |
| train-ter | train | 164 |
| train-tgv | high-speed train | 147 |
| tubestation | tube station | 125 |
| Total | | 3026 |

[Fig. 4 blocks: Input signal, Pre-emphasis, Frame Blocking, Windowing, FFT, Mel-frequency Filters, DCT, MFCCs]

[Fig. 5 blocks: Normalization; 50 ms frame, 50% overlap; Short-time Fourier Transform; Mel filterbank; Logarithm; Discrete Cosine Transform; Mean 0 and unit variance in each band]

4. Experiments

4.1. Dataset

The experiments are carried out on the LITIS Rouen dataset [13], which contains 3,026 audio clips of 30 seconds each from 19 different urban scene categories, with a total duration of about 1,500 minutes. The dataset was recorded from December 2012 to June 2014. Each class was recorded at different locations and on several days within that period. The recordings were sampled at 44.1 kHz, and the number of samples per class differs. The audio scene dataset is split into 20 train/test folds, in which each fold consists of a training set of 2,419 clips and a test set of 607 clips. A summary of the dataset is given in Table 1.

4.2. Experiment results

Table 2. Audio scene classification accuracy (%)

| Models | Feature | Accuracy |
|---|---|---|
| Baseline | HOG | 91.5 |
| LSTM | MFCC | 89.04 |
| GRU | MFCC | 92.85 |
| DGRU | MFCC | 94.92 |

In Table 2, we present the results for the LSTM, GRU and DGRU models based on MFCC features, and we also compare them to the baseline accuracy [13]. It is clear that, except for the LSTM model (89.05%), both our GRU and DGRU models achieve higher accuracy (92.85% and 94.92%, respectively) than the original paper (91.5%).

Table 3. Confusion matrix of overall classification (GRU models)

Table 3 shows the confusion matrix of overall classification for the GRU model over the 20 folds, which include 12,140 audio scenes.

Table 4. Confusion matrix of overall classification (DGRU model)

The confusion matrix of overall classification for the DGRU model on fold 1, which consists of 607 audio scenes, is presented in Table 4. According to Table 4, confusion between classes occurs very rarely. Almost all classes have a classification accuracy of more than 94%.

5. Conclusion

Classifying audio scenes is currently a challenge in the computational auditory scene analysis domain. In this paper we proposed three systems, LSTM, GRU and DGRU, for audio scene classification. Experimental results indicate that the GRU and DGRU models achieve significantly better performance than the LSTM and baseline models on the same dataset (LITIS Rouen).
In future experiments, we will apply our models to different datasets such as the DCASE dataset and use different optimization methods such as SGD, RMSprop, and Adagrad. Our models, especially DGRU, are not described in detail here; we will describe them in more detail in future work.

REFERENCES

[1] D. Wang and G. J. Brown, “Computational Auditory Scene Analysis: Principles, Algorithms, and Applications”, Wiley-IEEE Press, 2006.
[2] R. F. Lyon, “Machine hearing: An emerging field”, IEEE Signal Processing Magazine, 27(5):131–139, 2010.
[3] R. Radhakrishnan, A. Divakaran, and P. Smaragdis, “Audio analysis for surveillance applications”, in Proc. IEEE Workshop on Applications of Signal Processing to Audio and Acoustics (WASPAA), 2005, pp. 158–161.
[4] R. Mogi and H. Kasaii, “Noise-robust environmental sound classification method based on combination of ICA and MP features”, Artificial Intelligence Research, 2(1):107–121, 2013.
[5] M. Zöhrer, R. Peharz, and F. Pernkopf, “Representation learning for single-channel source separation and bandwidth extension”, IEEE/ACM Transactions on Audio, Speech, and Language Processing, Vol. 23, No. 12, 2015, pp. 2398–2409.
[6] E. Cakir, T. Heittola, H. Huttunen, and T. Virtanen, “Multilabel vs. combined single-label sound event detection with deep neural networks”, in 23rd European Signal Processing Conference 2015 (EUSIPCO 2015), 2015.
[7] E. Cakir, T. Heittola, H. Huttunen, and T. Virtanen, “Polyphonic sound event detection using multi label deep neural networks”, in 2015 International Joint Conference on Neural Networks (IJCNN), 2015, pp. 1–7.
[8]
[9] Hamid Eghbal-Zadeh, Bernhard Lehner, Matthias Dorfer, Gerhard Widmer, “A hybrid approach using binaural i-vectors and deep convolutional neural networks”, Detection and Classification of Acoustic Scenes and Events 2016, Tech. Rep., 2016.
[10] M. Zöhrer and F. Pernkopf, “Gated recurrent networks applied to acoustic scene classification and acoustic event detection”, IEEE AASP Challenge: Detection and Classification of Acoustic Scenes and Events, Sep. 2016.
[11] J. Chung, Ç. Gülçehre, K. Cho, and Y. Bengio, “Empirical evaluation of gated recurrent neural networks on sequence modeling”, CoRR, Vol. abs/1412.3555, 2014.
[12] J. Chung, C. Gulcehre, K. Cho, and Y. Bengio, “Gated feedback recurrent neural networks”, CoRR, Vol. abs/1502.02367, 2015.
[13] A. Rakotomamonjy and G. Gasso, “Histogram of gradients of time-frequency representations for audio scene classification”, IEEE/ACM Trans. Audio, Speech, and Language Processing, 23(1):142–153, 2015.
[14] Y. Bengio, P. Simard, and P. Frasconi, “Learning long-term dependencies with gradient descent is difficult”, IEEE Transactions on Neural Networks, 5(2):157–166, 1994.
[15] Matthew D. Zeiler, “ADADELTA: An adaptive learning rate method”.

(The Board of Editors received the paper on 08/05/2017, its review was completed on 24/05/2017)
