Duy Nguyen, Son Nguyen– Volume 2 – Issue 4-2020, p. 332-347.

Pattern Discovering for Ontology Based Activity Recognition in Multi-resident Homes

by Duy Nguyen (Thu Dau Mot University), Son Nguyen (Vietnam National University-Ho Chi Minh)

Article Info: Received 20 Sep 2020, Accepted 6 Nov 2020, Available online 15 Dec, 2020

Corresponding author: duynk@tdmu.edu.vn

https://doi.org/10.37550/tdmu.EJS/2020.04.079

ABSTRACT

Activity recognition is one of the preliminary steps in designing and implementing assistive services in smart homes. Such services help identify abnormalities or automate events generated while occupants perform, or intend to perform, their desired Activities of Daily Living (ADLs) inside a smart home environment. However, most existing systems are designed for single-resident homes. Multiple people living together create additional complexity in modeling the many overlapping and concurrent activities. In this paper, we introduce a hybrid mechanism that combines ontology-based and unsupervised machine learning strategies to create activity models used for activity recognition in the context of multi-resident homes. Compared with related data-driven approaches, the proposed technique is technically and practically scalable to real-world scenarios due to its fast training time and easy implementation. An average activity recognition rate of 95.83% was achieved on the CASAS Spring dataset, and the average recognition run time per operation was measured as 12.86 milliseconds.

Keywords: Activity recognition, multi-resident homes, ontology-based approaches

1. Introduction

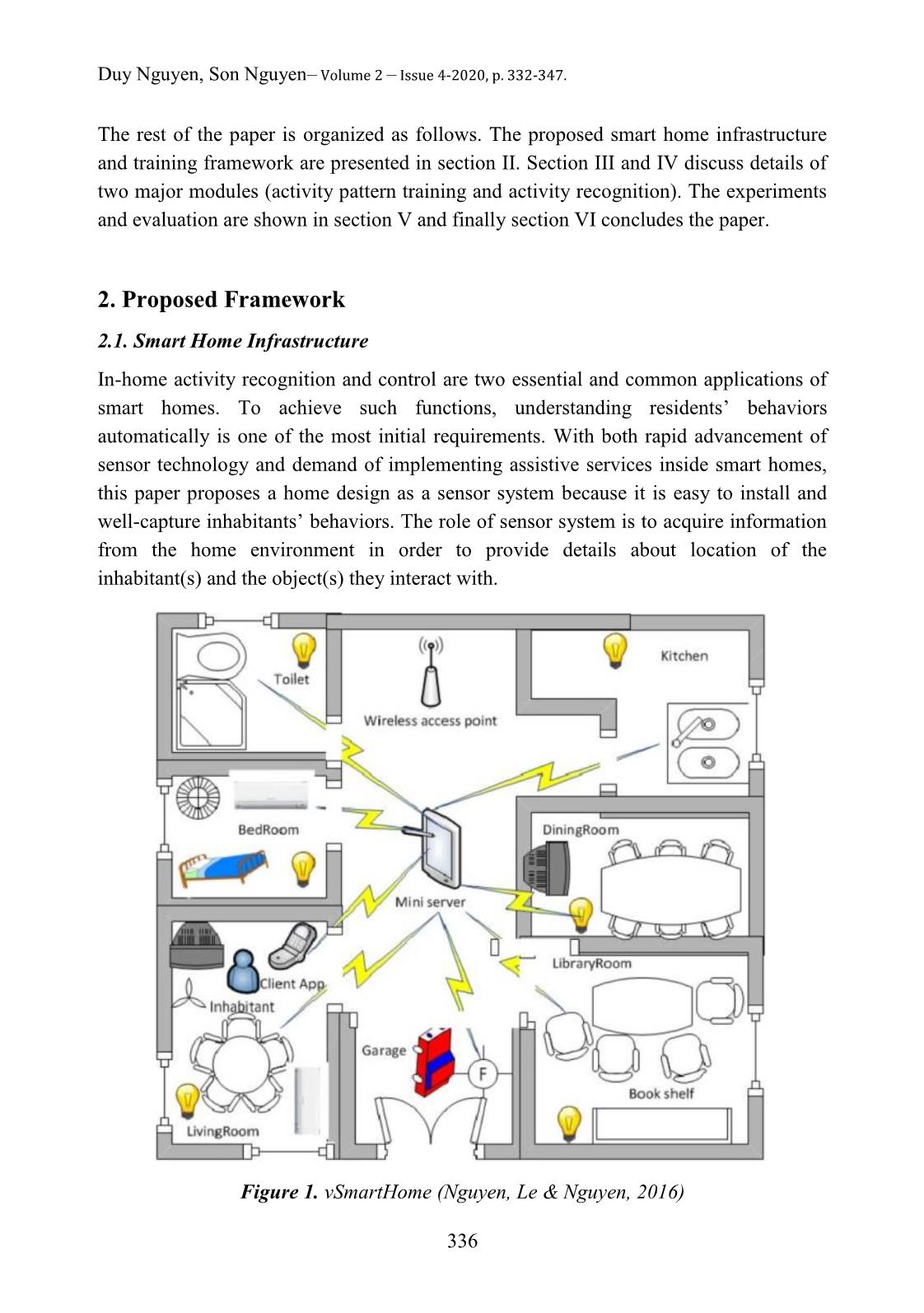

A smart home is a kind of pervasive environment in which hardware and information technology are integrated into a normal home to achieve the following goals: safety, comfort and sometimes entertainment. Activities of Daily Living (ADLs) and Instrumental ADLs (IADLs) are the fundamental activities inside smart homes. In smart homes used for healthcare, the ability to perform such activities is considered an essential criterion for assessing the condition of patients and elderly citizens. Therefore, continuously recognizing ADLs and IADLs is an important preliminary step in systems that provide assistive services, help detect early symptoms of diseases, provide an exact medical history to physicians, etc. (Emi & Stankovic, 2015).

Thu Dau Mot University Journal of Science – Volume 2 – Issue 4-2020

Activity recognition is a key part of every assistive system inside a smart home and is built by finding, or training the system on, occupants' behaviors. After training, the resulting activity models can be used for assistive and automation functions such as activity detection, prediction or decision making. Learning the behavioral patterns of the occupant is essential to creating effective models.

Information on ADLs used for learning comes from many sources, such as data from previous observations, domain experts, text corpora and, in specific cases, web services (Chen et al., 2012a; Atallah & Yang, 2009). Observations for training activity models come from video and audio devices as well as wearable, RFID or object-based sensors. A large body of research uses video and audio devices, but this approach has the limitation of violating the privacy of the occupants (Chen et al., 2012a). While wearable sensors are reported to be uncomfortable for inhabitants and difficult to deploy in scalable systems, RFID and object-based sensors can be used efficiently to report continuously on residents' activities and the status of the environment. Hence our research focuses on sensor-based activity recognition in which the training data is collected from these kinds of sensors.

Sensor-based activity recognition is categorized as data-driven or knowledge-driven according to the modeling technique. Data-driven approaches analyze the data collected from previous observations in the smart home environment, and machine learning techniques are then used to build activity models from the sensor datasets. Such data can be either annotated or unlabeled. Supervised learning techniques (Chen et al., 2012a; Augusto et al., 2010) require a labeled dataset for effective modeling, while unsupervised or semi-supervised techniques use unlabeled data for the training process. Clustering (Lotfi et al., 2012) and pattern clustering (Rashidi et al., 2011) are two unsupervised approaches to activity recognition applied in a few existing smart home systems. In many circumstances, unlabeled datasets are preferred for activity modeling in smart homes because of the excessive labeling overhead and the possibility of data errors. Two concerns with data-driven approaches are the "cold start problem" and "re-usability". The smart home system needs enough time to collect a large amount of sensor data before it can accurately model the occupant's behavior. However, the activity models creat ...

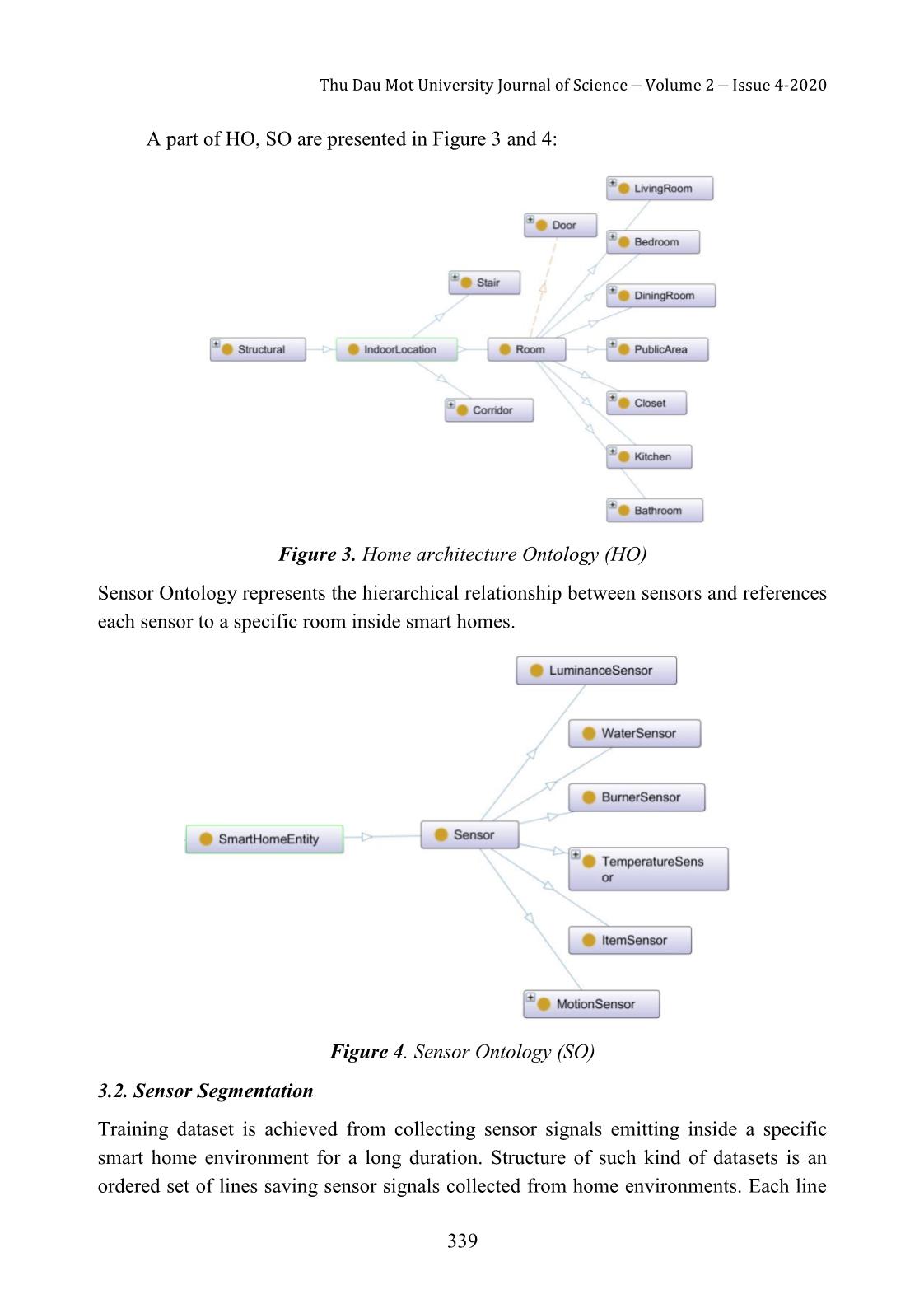

- Start and end time of each activity;
- HO and SO of the house
Output: A
A = {}
activities = {}
for each {time_i, sensorId_i, sensorValue_i} in E do
    location_i = room where sensorId_i is located
    if ({time_i, sensorId_i, sensorValue_i} is starting event of an activity) then
        Create a new activity: newActivity
        newActivity.startTime = time_i
        Add sensor event {sensorId_i, sensorValue_i} to newActivity's sensor event list
        Add newActivity to activities
    else if ({time_i, sensorId_i, sensorValue_i} is ending event of an activity) then
        currentActivity = get activity that is performed in location_i from activities
        Add sensor event {sensorId_i, sensorValue_i} to currentActivity's sensor event list (currentActivity.sensors)
        Add currentActivity to A
    else if (activities has an activity in location_i) then
        currentActivity = get activity that is performed in location_i from activities
        Add sensor event {sensorId_i, sensorValue_i} to currentActivity's sensor event list (currentActivity.sensors)
    end if
end for
return A
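The segmentation loop above can be sketched in Python roughly as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the `SensorEvent` and `Activity` types, the `room_of` dictionary (standing in for the ontology lookup) and the `kind` field marking annotated start and end events are all hypothetical names introduced for illustration. The sketch also records the end time and removes an activity from the open set when its ending event arrives, a step the pseudocode leaves implicit.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SensorEvent:
    time: float                 # timestamp, e.g. seconds since the start of the log
    sensor_id: str
    value: str
    kind: Optional[str] = None  # "start", "end" or None (assumed annotation flag)


@dataclass
class Activity:
    location: str
    start_time: float
    end_time: Optional[float] = None
    sensors: List[tuple] = field(default_factory=list)


def segment_training_events(events: List[SensorEvent],
                            room_of: Dict[str, str]) -> List[Activity]:
    """Group annotated sensor events into per-room activity instances."""
    finished: List[Activity] = []            # "A" in the pseudocode
    open_by_room: Dict[str, Activity] = {}   # "activities" in the pseudocode

    for ev in events:
        room = room_of[ev.sensor_id]         # stands in for the ontology query
        if ev.kind == "start":
            act = Activity(location=room, start_time=ev.time)
            act.sensors.append((ev.sensor_id, ev.value))
            open_by_room[room] = act
        elif ev.kind == "end":
            act = open_by_room.pop(room, None)   # assumption: close the activity
            if act is not None:
                act.sensors.append((ev.sensor_id, ev.value))
                act.end_time = ev.time
                finished.append(act)
        elif room in open_by_room:
            open_by_room[room].sensors.append((ev.sensor_id, ev.value))
    return finished
```

Keying the open activities by room is what lets interleaved events from two residents in different rooms end up in separate activity instances.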

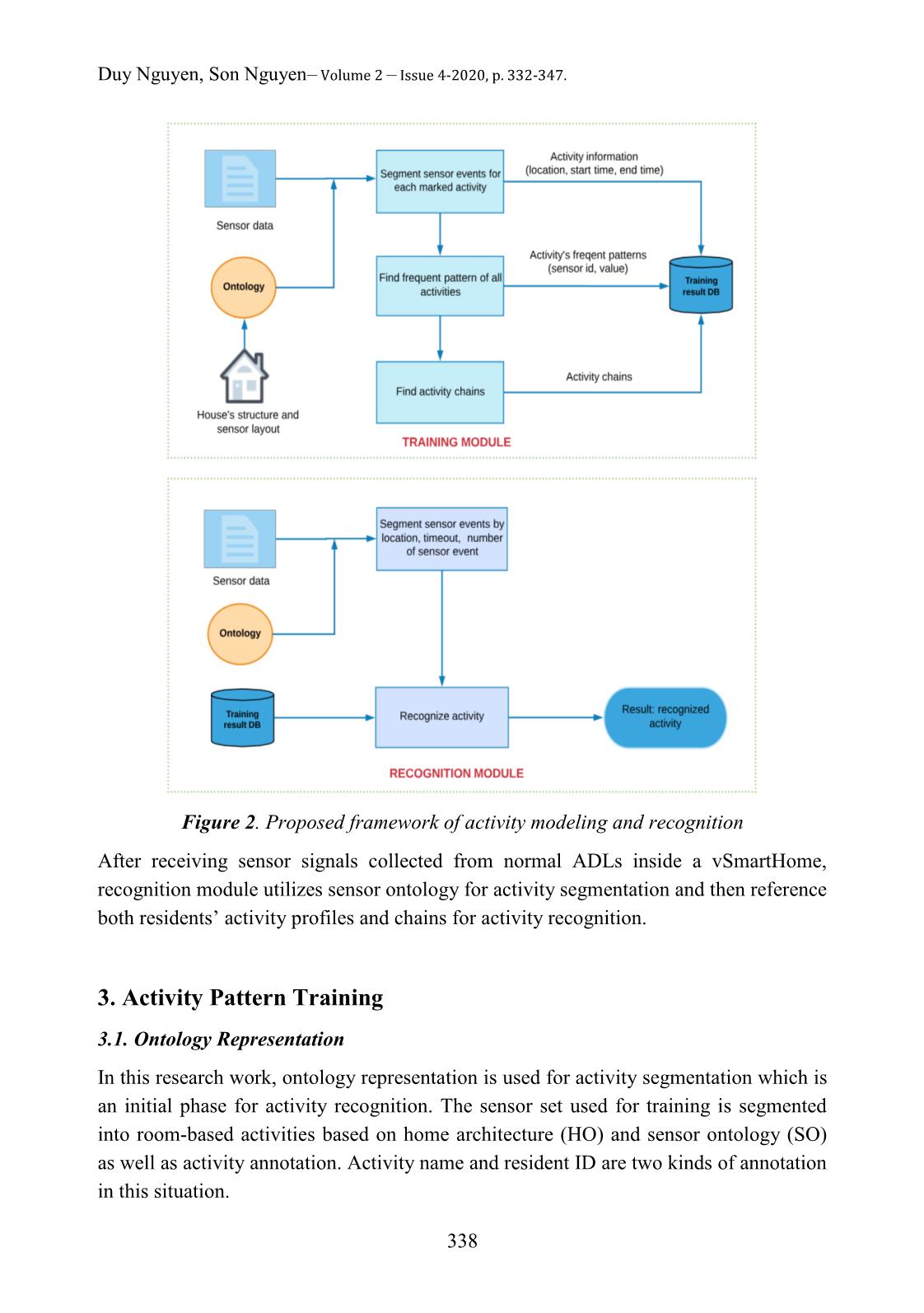

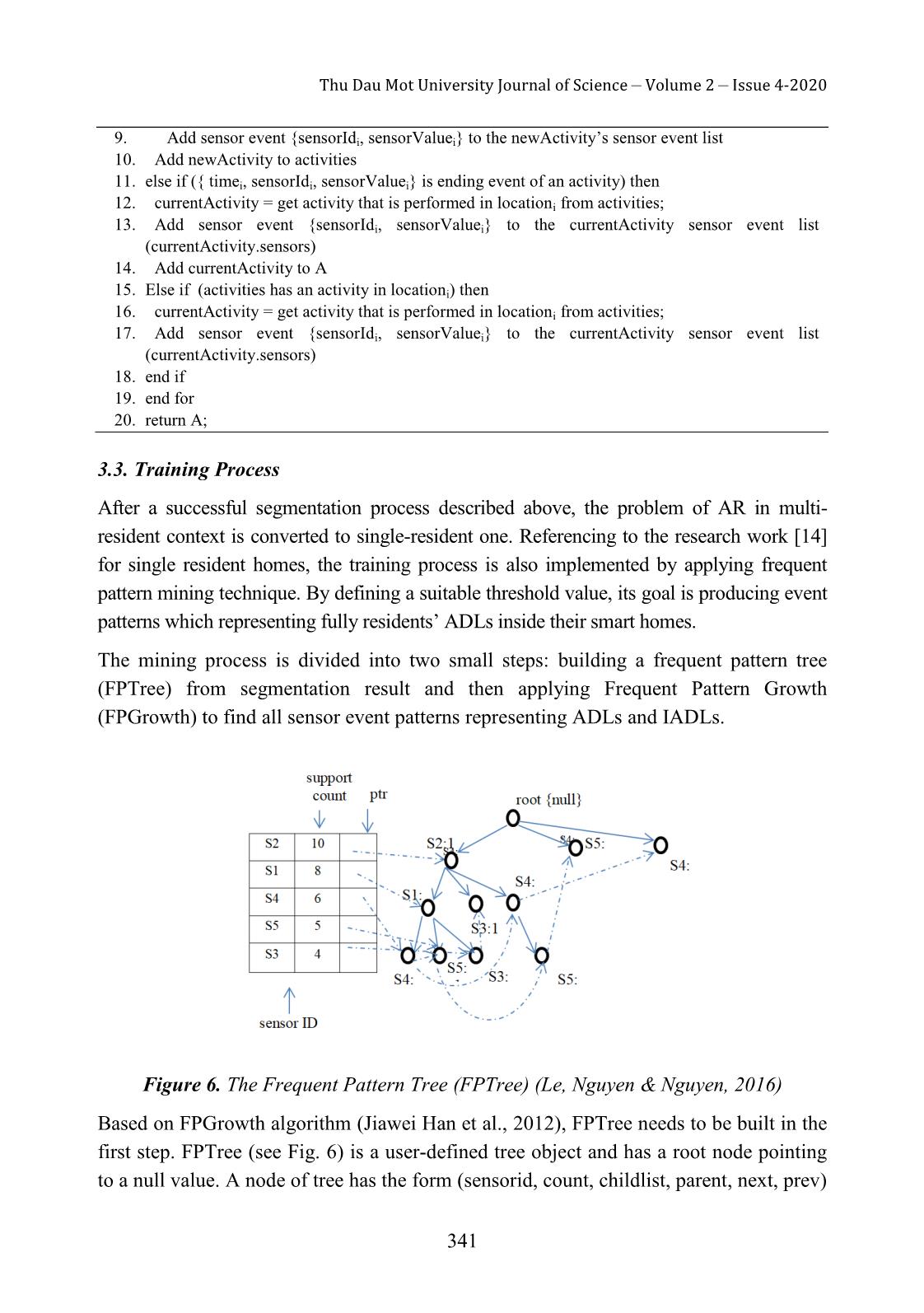

3.3. Training Process

After the segmentation process described above completes successfully, the problem of activity recognition (AR) in a multi-resident context is reduced to the single-resident one. Following the work in [14] for single-resident homes, the training process is also implemented by applying a frequent pattern mining technique. With a suitable threshold value, its goal is to produce event patterns that fully represent residents' ADLs inside their smart homes.

The mining process is divided into two steps: building a frequent pattern tree (FPTree) from the segmentation result, and then applying Frequent Pattern Growth (FPGrowth) to find all sensor event patterns representing ADLs and IADLs.

Figure 6. The Frequent Pattern Tree (FPTree) (Le, Nguyen & Nguyen, 2016)

Following the FPGrowth algorithm (Han et al., 2012), the FPTree must be built first. The FPTree (see Fig. 6) is a user-defined tree object whose root node points to a null value. Each node of the tree has the form (sensorid, count, childlist, parent, next, prev), where sensorid is the unique id of a sensor, count is the number of times the sensor broadcasts signals into the home environment, childlist is the list of the node's child nodes, parent points to its parent node, and next and prev are pointers to other tree nodes on the FPTree that have the same sensor id.
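As an illustration of the node layout described above, the following Python sketch builds such a tree from lists of sensor ids. It is a sketch under assumptions rather than the authors' code: the class names `FPNode` and `FPTree` and the `_tail` table are hypothetical, `count` follows the usual FP-tree convention of counting how many inserted sequences pass through a node, and each input list is assumed to be already ordered the way FPGrowth expects (by descending global frequency).

```python
from typing import Dict, List, Optional


class FPNode:
    """One tree node: (sensorid, count, childlist, parent, next, prev)."""

    def __init__(self, sensor_id: Optional[str],
                 parent: Optional["FPNode"] = None) -> None:
        self.sensor_id = sensor_id                  # None for the root
        self.count = 0
        self.children: Dict[str, "FPNode"] = {}     # childlist, keyed by sensor id
        self.parent = parent
        self.next: Optional["FPNode"] = None        # next node with the same id
        self.prev: Optional["FPNode"] = None        # previous node with the same id


class FPTree:
    def __init__(self) -> None:
        self.root = FPNode(sensor_id=None)
        self._tail: Dict[str, FPNode] = {}          # last node created per sensor id

    def insert(self, sensor_ids: List[str]) -> None:
        """Insert one segment (ordered sensor ids) along a shared prefix path."""
        node = self.root
        for sid in sensor_ids:
            child = node.children.get(sid)
            if child is None:
                child = FPNode(sensor_id=sid, parent=node)
                node.children[sid] = child
                tail = self._tail.get(sid)
                if tail is not None:                # link into the same-id chain
                    tail.next, child.prev = child, tail
                self._tail[sid] = child
            child.count += 1
            node = child
```

The next/prev chain is what allows a mining pass to visit every occurrence of one sensor id without walking the whole tree.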

The mining results are sensor event patterns that not only define the contextual description of ADLs but also differentiate the behaviors of each resident by forming personal activity profiles and summarizing the activity chains performed by an inhabitant on a daily basis.

4. Recognition Mechanism

When the mini server receives sensor signals, it segments the sensor sequence based on the home architecture and sensor ontologies. Segmentation helps to recognize concurrent activities that take place in different rooms at the same time and are performed by different residents living inside a smart home. Once a sensor segment contains a defined number of sensor events or exceeds a duration timeout, the system uses the sensor events inside the segment for activity recognition. This mechanism helps to recognize ADLs continuously, even when a resident finishes one activity and starts another in the same room.

In general, the recognition process consists of two stages: 1) Sensor Segmentation; 2) Activity Recognition.

4.1. Sensor Segmentation

The input data are sensor event sequences produced inside the smart home environment, where each event may come from a different location or room. The system compares the location of each sensor with the rooms of the currently open segments to decide whether to add the event to an existing segment or to create a new one. During segmentation, the system also tests the conditions for triggering activity recognition. The process is described in the algorithm below:

Algorithm 2 Segmentation of sensor events
Input:
    - sensorEvents: list of sensor events
    - blockTime: maximum time of a segment
    - maxSensorNumber: maximum sensor events of a segment
Output:
    Segment of sensor events in a location that is input for activity recognition phase
activityThreads = {}
for each sensorEvent in sensorEvents do
    sensorLocation = room where the sensor of sensorEvent is located (query from ontology O)
    if activityThreads has a sensor event list located in sensorLocation then
        sensorEventList = get sensor event list in sensorLocation from activityThreads
        add sensorEvent to sensorEventList
        if sensorEventList.size >= maxSensorNumber OR sensorEventList.lastEventDate - sensorEventList.firstEventDate >= blockTime then
            remove sensorEventList from activityThreads and start recognizing the activity of this sensor event list
        end if
    else
        create newSensorEventList and add sensorEvent to it
        add newSensorEventList to activityThreads
    end if
end for
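A minimal Python sketch of this online segmentation is given below. It is illustrative only: events are assumed to be `(timestamp, sensor_id, value)` tuples with timestamps in seconds, `room_of` is a hypothetical dictionary standing in for the ontology query, and `recognize` is a hypothetical callback that receives each flushed segment.

```python
from typing import Callable, Dict, List, Tuple

Event = Tuple[float, str, str]   # (timestamp in seconds, sensor id, value)


def segment_stream(events: List[Event],
                   room_of: Dict[str, str],
                   block_time: float,
                   max_events: int,
                   recognize: Callable[[str, List[Event]], None]
                   ) -> Dict[str, List[Event]]:
    """Route events into per-room segments; flush full or timed-out segments."""
    threads: Dict[str, List[Event]] = {}   # activityThreads, keyed by room
    for ev in events:
        room = room_of[ev[1]]              # stands in for the ontology lookup
        if room in threads:
            seg = threads[room]
            seg.append(ev)
            too_long = seg[-1][0] - seg[0][0] >= block_time
            if len(seg) >= max_events or too_long:
                # Remove the segment and hand it to the recognition stage.
                recognize(room, threads.pop(room))
        else:
            threads[room] = [ev]
    return threads                         # segments still open at end of input
```

For example, calling `segment_stream(events, room_of, block_time=60.0, max_events=3, recognize=print)` would print each room's segment as soon as it holds three events or spans at least a minute.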

4.2. Activity Recognition

Sensor segments are used as input data for activity recognition. In the first stage, the system compares the content of a segment with the event patterns saved in activity clusters or residents' activity profiles. The activities with the highest match level are returned as the recognition result. In addition, the activity chains summarized after event pattern clustering are used to improve the accuracy of the recognition results. Based on these chains, the system can also predict activities that might take place after the recognized activity.

The process is detailed in the algorithm below:

Algorithm 3 Recognize activity
Input:
    - Training result:
        - activityPatterns: list of activities and sensor event patterns
        - activityChains: list of activity chains created by algorithm 2
        - trainingActivityList: list of all performed activities in training data
    - sensorEvents: list of sensor events in the same location, segmented by algorithm 2
    - location: location of sensorEvents
Output:
    - Activity of the sensor event list
    - Possible activity chain
// list of activities and the match point of each activity with sensorEvents
activityMatchingList = {}
filter activityPatterns, trainingActivityList and activityChains by time and location to reduce processing time
for each activityPattern in activityPatterns do
    calculate matchPoint of sensorEvents based on activityPattern and trainingActivityList
    add activityPattern.activityName and matchPoint to activityMatchingList
end for
sort activityMatchingList by matchPoint descending; filter out elements with a low matchPoint
group identical elements in activityChains and sort by number of appearances descending
for each activityMatching in activityMatchingList do
    for each activityChain in activityChains do
        if activityChain includes activityMatching then
            print activity information: name, location, and possible activity chain
        end if
    end for
end for
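The matching and chain lookup can be sketched in Python as follows. The paper does not specify how matchPoint is computed, so this sketch assumes a Jaccard overlap between the sensor ids in a segment and those in a pattern; the function names, the `min_score` cut-off and the data layout are likewise hypothetical, and the time and location pre-filtering step is omitted for brevity.

```python
from collections import Counter
from typing import Dict, FrozenSet, List, Sequence, Tuple

Chain = Tuple[str, ...]


def match_point(segment: Sequence[str], pattern: FrozenSet[str]) -> float:
    """Assumed score: Jaccard overlap between segment sensors and a pattern."""
    seen = set(segment)
    union = seen | pattern
    return len(seen & pattern) / len(union) if union else 0.0


def recognize(segment: Sequence[str],
              activity_patterns: Dict[str, List[FrozenSet[str]]],
              activity_chains: List[Chain],
              min_score: float = 0.5) -> List[Tuple[str, float, List[Chain]]]:
    """Rank candidate activities and attach the chains that contain them."""
    scored = []
    for name, patterns in activity_patterns.items():
        best = max((match_point(segment, p) for p in patterns), default=0.0)
        if best >= min_score:                      # drop low match points
            scored.append((name, best))
    scored.sort(key=lambda item: item[1], reverse=True)

    # Group identical chains and order them by how often they appear.
    ranked_chains = [c for c, _ in Counter(activity_chains).most_common()]

    results = []
    for name, score in scored:
        chains = [c for c in ranked_chains if name in c]
        results.append((name, score, chains))
    return results
```

Returning the chains alongside each candidate keeps the prediction step (which activity is likely to follow) separate from the scoring step, so either can be replaced independently.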

5. Experiments And Evaluation

The efficiency of the proposed approach lies in two elements: fast training time and easy implementation in a normal home with many rooms and more than one resident. The approach lies between knowledge-based and data-driven techniques, and it minimizes the dependence on domain knowledge provided by experts. Moreover, the way ADLs are performed may change over time as residents' habits or behaviors change, so relying on only one kind of knowledge would make the smart home less flexible and slower to adapt. In addition, using the home architecture and sensor ontologies makes it easier to segment sensor sequences that come from concurrent activities in multi-resident homes.

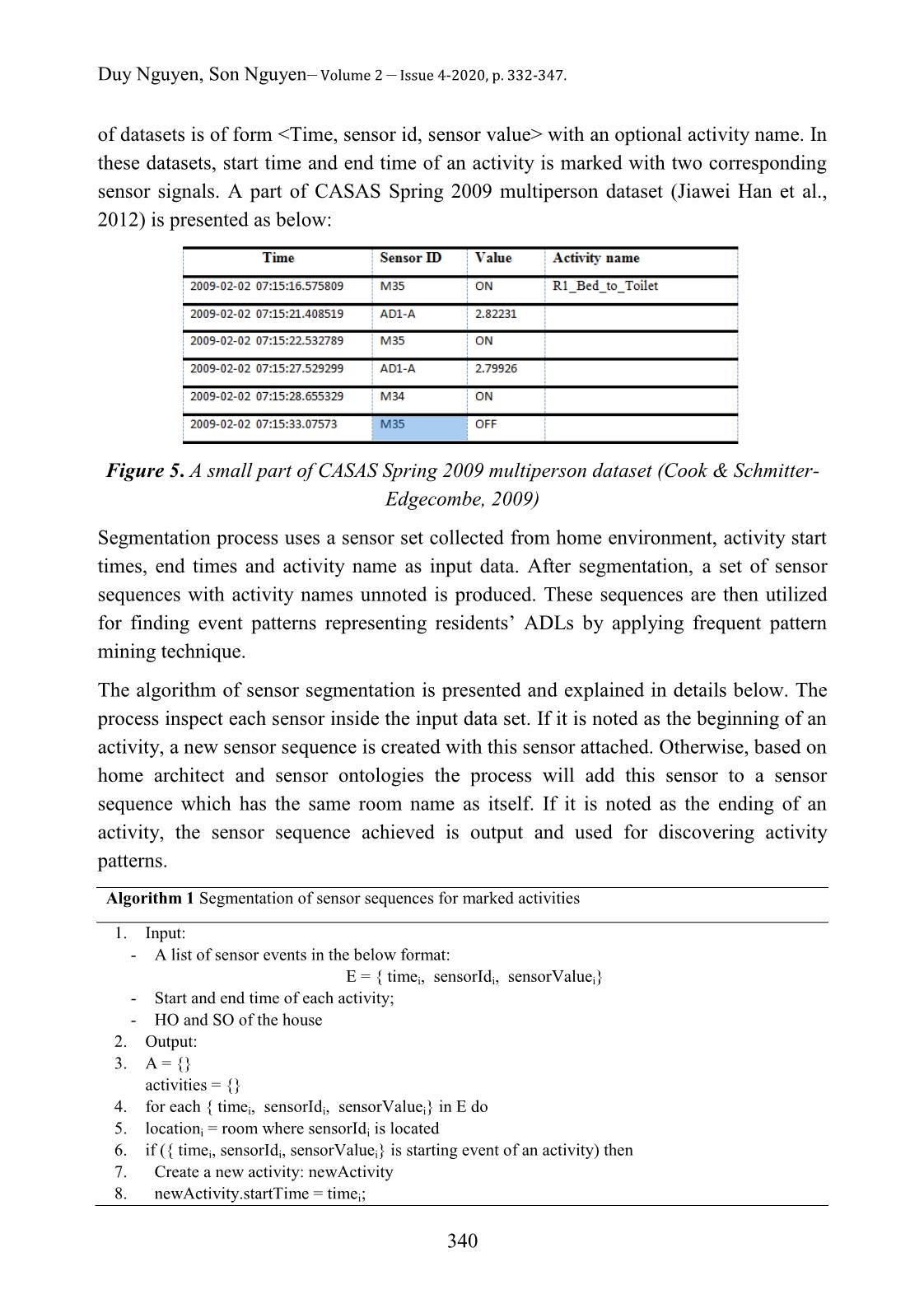

5.1. Experiments

There are few well-annotated multi-user datasets available in the smart home community. After careful selection, we chose a public dataset from the CASAS Smart Home project (Cook & Schmitter-Edgecombe, 2009) for the experiments. In this paper, we report performance results of the proposed approach on the "CASAS Spring 2009 multiperson dataset" (see Figure 7). The data was collected from a two-story apartment that housed two residents who performed their normal daily activities. The ground floor includes a kitchen, two small rooms and stairs. The second floor includes two bedrooms, one toilet and an empty room. The dataset annotates several ADLs such as sleeping, personal hygiene, preparing meal, work, study and watching TV. Seventy-two sensors are deployed in the house, including motion, item, door/contact and temperature sensors (Emi & Stankovic, 2015).

The two-month dataset is divided into two parts: 1 month and 22 days used for training, and the remaining 10 days for recognition.

5.2. Evaluation

Training on a computer running Windows 10 Pro with an Intel Core i3-8100 CPU (6M cache, 3.60 GHz) and 8 GB of RAM takes 13.485ms.

Figure 7. CASAS Spring Sensor Deployment (Cook & Schmitter-Edgecombe, 2009)

The recognition accuracy is measured for 4 ADLs and presented in the table below:

TABLE 1. Accuracy percentage of the proposed system

| Activity name | Number of sensor segments | Accuracy |
|---|---|---|
| Work | 99 | 97.98% |
| Preparing meal | 67 | 97.01% |
| Sleeping | 335 | 99.403% |
| Personal hygiene | 107 | 87.06% |
| Accuracy average | | 95.83% |

The proposed approach is shown to have the same accuracy rating as the SARRIMA system (Emi & Stankovic, 2015), while being technically and practically scalable to real-world scenarios thanks to its fast training time and easier implementation. The home architecture and sensor network of a smart home are known in advance. In a multi-resident context with many concurrent activities, the two corresponding kinds of ontology make it easier to segment sensor streams into inhabitants' activity instances. In addition, the experimental results show that each activity recognition takes about 1ms, which is fast enough for real-time activity recognition.

6. Conclusion

Smart homes offer assistive services by modeling an activity recognition system. The proposed approach to activity modeling and recognition combines the strong points of knowledge-based and data-driven techniques. In this work, we use ontologies only to segment sensor sequences, which solves the problem of concurrent activities in a multi-resident context. We then apply a pattern mining technique to model activities. The resulting models are shown to be more flexible and adaptable to changes in residents' behavior, and they depend only minimally on experts' knowledge. Residents' habits and behaviors always change over time due to many factors, so making the smart home system increasingly flexible to change is very important. After a defined duration, the system needs the ability to refresh its activity models by re-training. In the future, we will implement the proposed system in other smart home environments and look for the re-training conditions necessary to deploy a real smart home system efficiently over a long time.

References

Atallah, L., Yang, G.-Z. (2009). The use of pervasive sensing for behavior profiling—a survey.

Pervasive Mob. Comput. 5(5), 447–464.

Augusto, J.C., Nakashima, H., Aghajan, H. (2010). Ambient intelligence and smart environments: a state of the art. In: Handbook of Ambient Intelligence and Smart Environments, 3–31.

Aztiria, A., Izaguirre, A., Augusto, J.C. (2010). Learning patterns in ambient intelligence environments: a survey. Artif. Intell. Rev. 34(1), 35–51. Springer, Netherlands.

Chen, L., Hoey, J., Nugent, C.D., Cook, D.J., Zhiwen, Y. (2012a). Sensor-based activity recognition. IEEE Trans. Syst. Man Cybern. Part C 42(6), 790–808.

Chen, L., Hoey, J., Nugent, C.D., Cook, D.J., Zhiwen, Y. (2012b). Sensor-based activity recognition. IEEE Trans. Syst. Man Cybern. Part C 42(6), 790–808.

Chen, L., Nugent, C.D., Wang, H. (2012c). A knowledge-driven approach to activity recognition in smart homes. IEEE Trans. Knowl. Data Eng. 24(6), 961–974.

Cook, D.J., Schmitter-Edgecombe, M. (2009). Assessing the quality of activities in a smart environment. Methods Inf. Med. 48(5), 480–485.

Emi, I.A., Stankovic, J.A. (2015). SARRIMA: a smart ADL recognizer and resident identifier in multi-resident accommodations. In: Proceedings of the Conference on Wireless Health (Bethesda, Maryland, October 14-16, 2015). ISBN: 978-1-4503-3851-6.

Gayathri, K.S., Easwarakumar, K.S., Elias, S. (2017). Contextual Pattern Clustering for Ontology Based Activity Recognition in Smart Home. The International Conference on Intelligent Information Technologies (17 December 2017).

Gayathri, K.S., Elias, S., Shivashankar, S. (2014). An Ontology and Pattern Clustering Approach for Activity Recognition in Smart Environments. In: Proceedings of Advances in Intelligent Systems and Computing (04 March 2014).

Han, J., Kamber, M., Pei, J. (2012). Mining Frequent Patterns, Associations and Correlations: Basic Concepts and Methods. In: Data Mining: Concepts and Techniques, 3rd edition, 243–278.

Le, T., Nguyen, D., Nguyen, S. (2016). An approach of using in-home contexts for activity recognition and forecast. In: Proceedings of the 2nd International Conference on Control, Automation and Robotics, ISBN: 978-1-4673-8702-6, pp. 182-186.

Lotfi, A., Langensiepen, C.S., Mahmoud, S.M., Akhlaghinia, M.J. (2012). Smart homes for the elderly dementia sufferers: identification and prediction of abnormal behaviour. J. Ambient Intell. Humaniz. Comput. 3(3), 205–218.

Nguyen, D., Le, T., Nguyen, S. (2016). A Novel Approach to Clustering Activities within Sensor Smart Homes. The International Journal of Simulation Systems, Science & Technology.

Okeyo, G., Chen, L., Wang, H., Sterritt, R. (2010). Ontology-enabled activity learning and model evolution in smart home. The International Conference on Ubiquitous Intelligence and Computing, pp. 67-82.

Rashidi, P., Cook, D.J., Holder, L.B., Schmitter-Edgecombe, M. (2011). Discovering activities to recognize and track in a smart environment. IEEE Trans. Knowl. Data Eng. 23(4), 527–539.

Ye, J., Stevenson, G., Dobson, S. (2015). KCAR: A knowledge-driven approach for concurrent activity recognition. Pervasive and Mobile Computing (May 2015), vol 15, 47-70.
