Viterbi algorithm for english - Vietnamese sentence retrieval in ebmt

An Example-Based Machine Translation (EBMT) system retrieves the translation examples

that are most similar to an input expression and adjusts the examples to obtain the translation. The

two most popular translation example unit is phrase and sentence. In phrase unit, the translations

of phrases are combined into a translation to form the input sentence. Although a phrase unit is

a suitable translation example unit in many case because of its generality for covering various

sentences, there is a risk of mixing errors or producing unnatural translations while combining

phrases into a sentence [8]. To deal with these risk, we use the translation example unit is a

sentence. In this kind of translation, a example similar enough to an input sentence as a whole

results in an accurate and natural translation of the input sentence. Because of its generality, an

EBMT system whose translation example unit is a sentence suffers from the problem of narrow

coverage because a sentence is a longer unit and its generality is lower. To achieve sufficiently

broad translation coverage by using sentence-wise EBMT, a large-scale parallel corpus must be

prepared and, therefore, an efficient method is needed to retrieve translation examples from a

large-scale corpus.

Trang 1

Trang 2

Trang 3

Trang 4

Trang 5

Trang 6

Trang 7

Tóm tắt nội dung tài liệu: Viterbi algorithm for english - Vietnamese sentence retrieval in ebmt

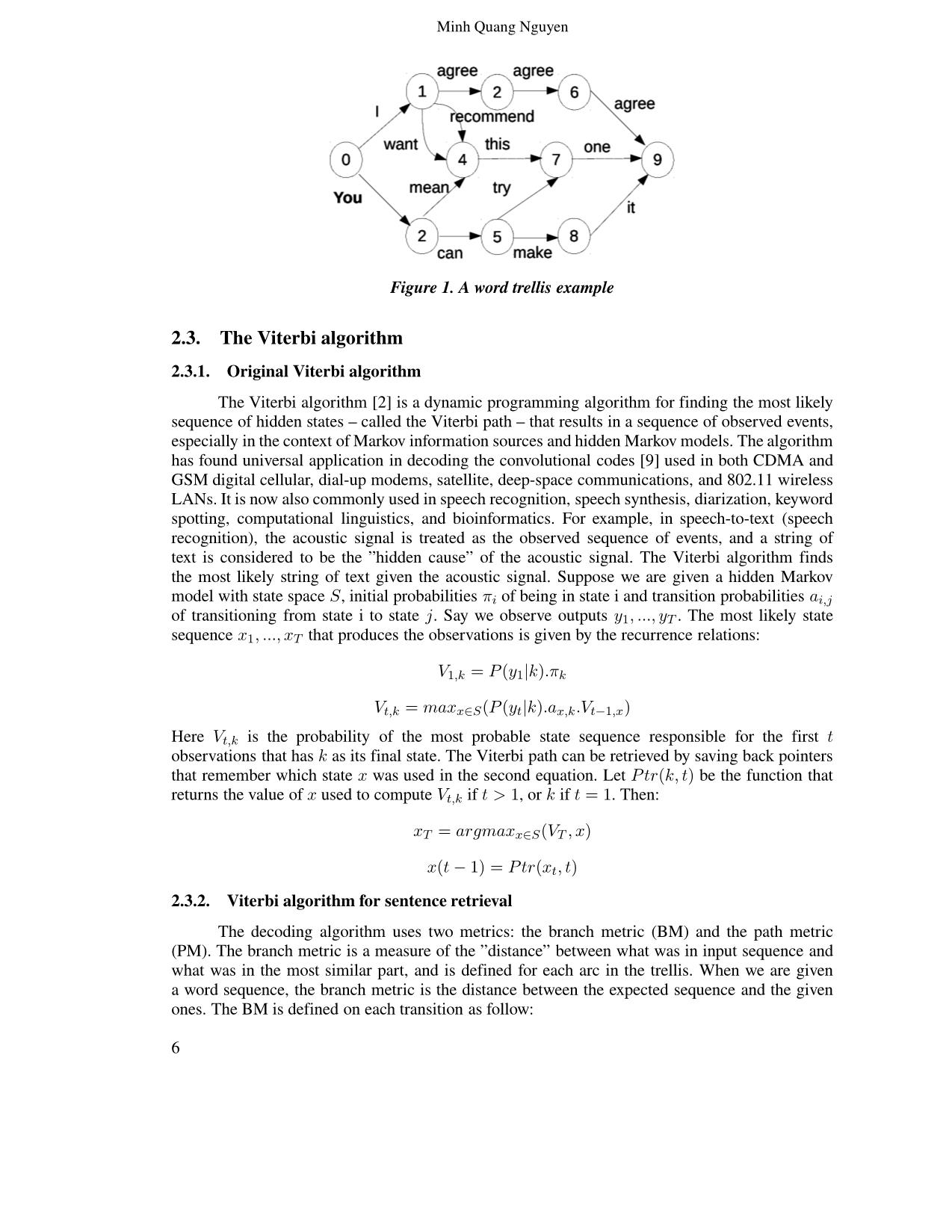

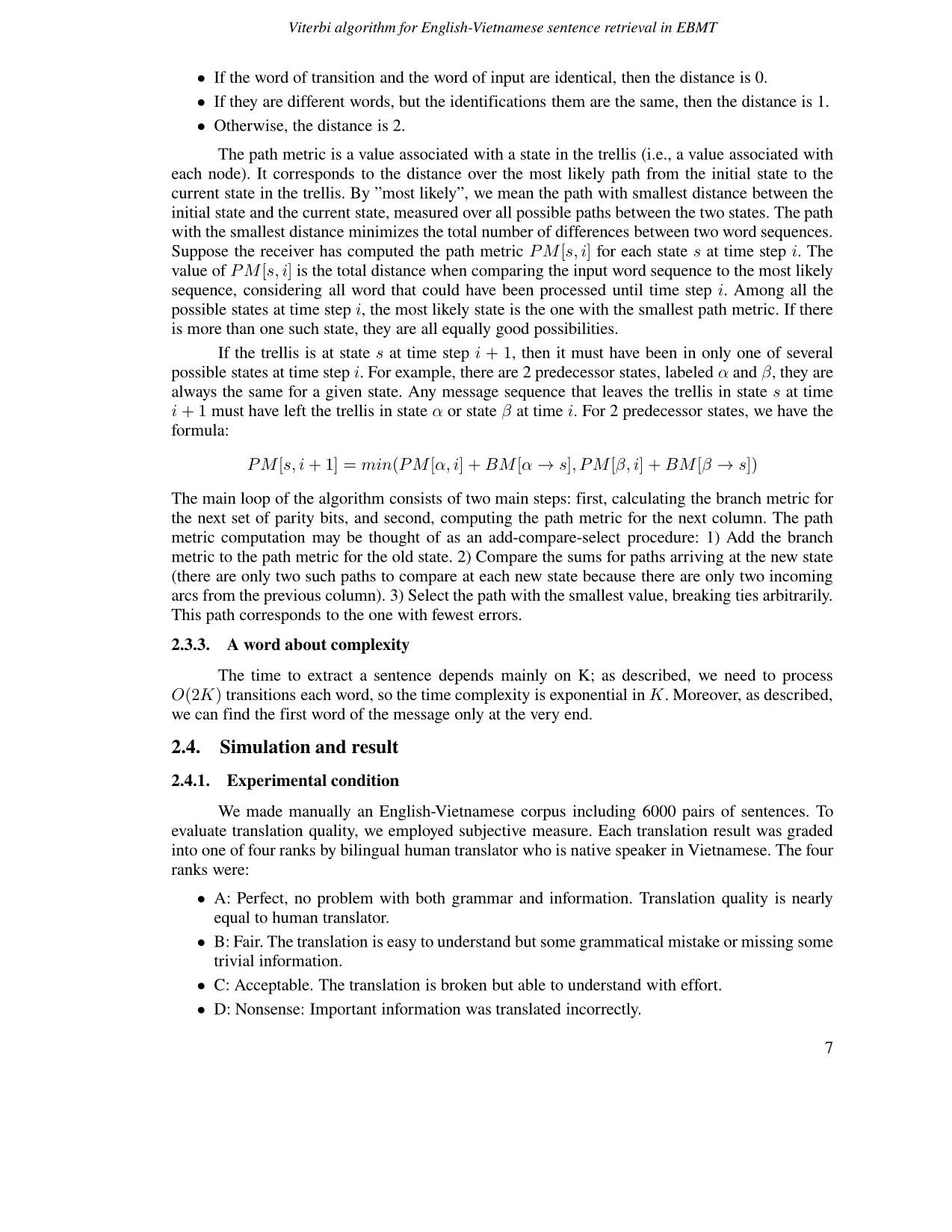

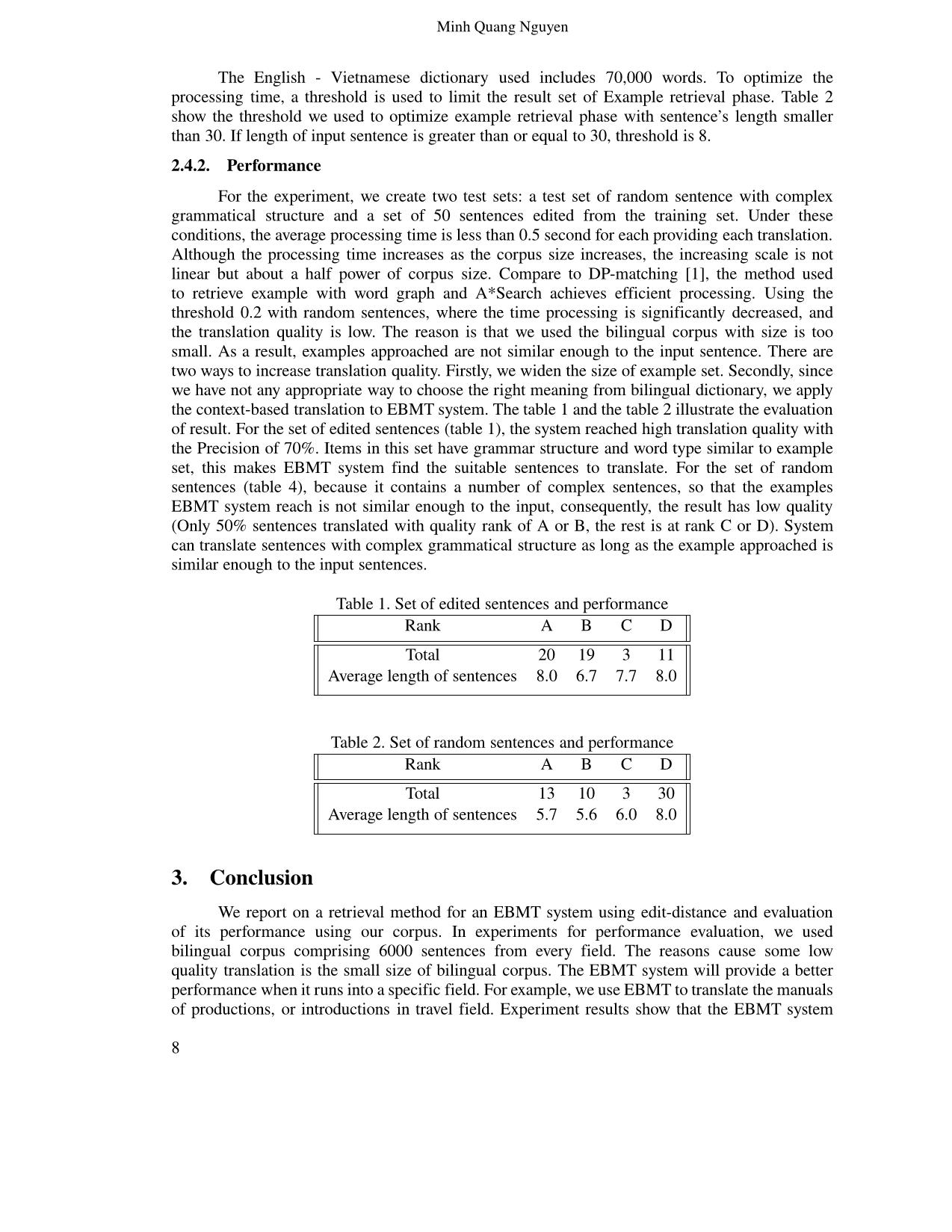

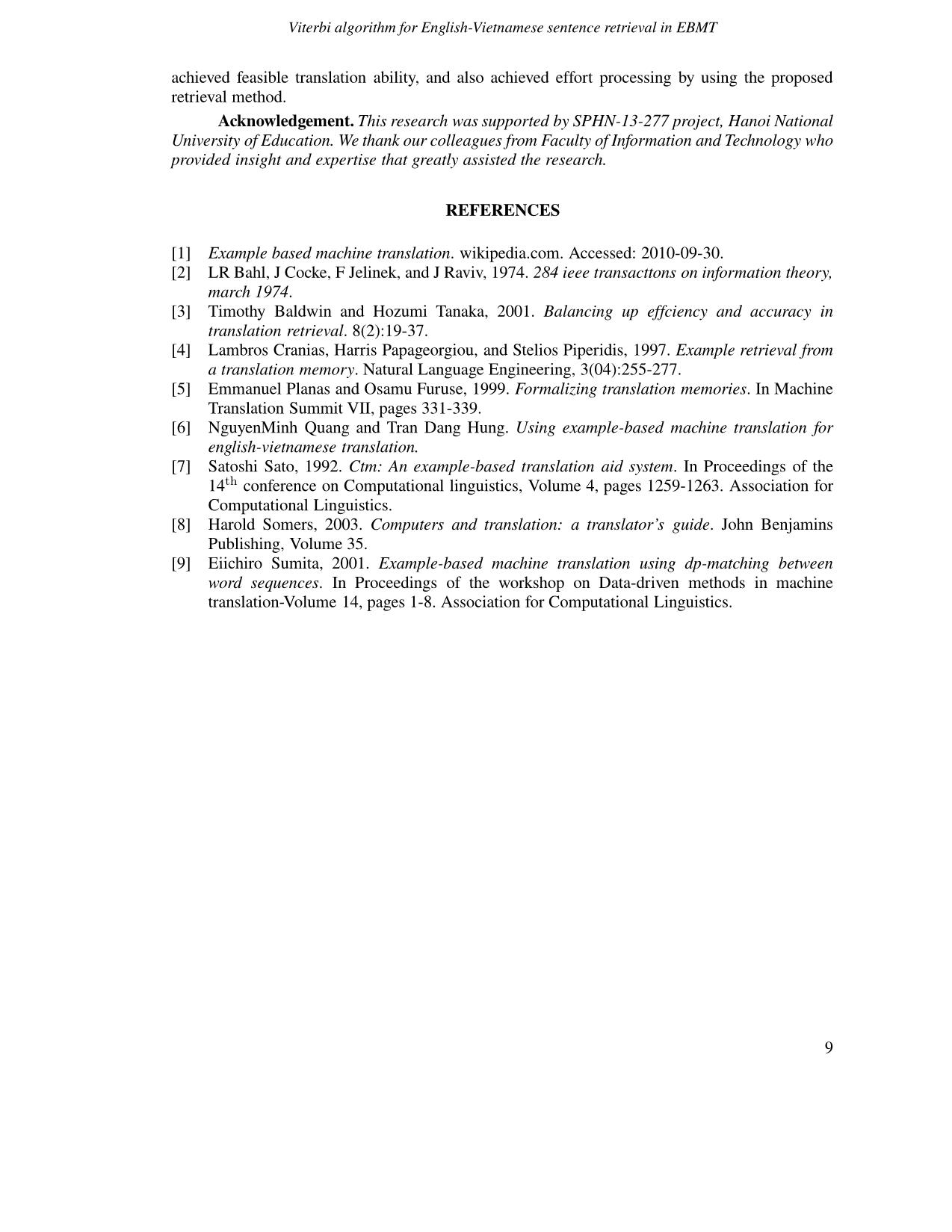

JOURNAL OF SCIENCE OF HNUE DOI: 10.18173/2354-1075.2015-0047 Educational Sci., 2015, Vol. 60, No. 7A, pp. 3-9 This paper is available online at VITERBI ALGORITHM FOR ENGLISH - VIETNAMESE SENTENCE RETRIEVAL IN EBMT Minh Quang Nguyen Software Engineering Department, Hanoi National University of Education Abstract. Example-based machine translation (EBMT) is a method of machine translation characterized by the use of a bilingual corpuswith parallel texts as the main knowledge base at run-time. In EBMT strategy, sentence retrieval is one of the most important modules. In this step, a dynamic programming algorithm or word-graph is used and from the bilingual corpus the most similar sentence is chosen for the next steps. In this paper, we propose an algorithm to pick a sentence from the corpus based on the Viterbi algorithm. The result shows that our proposedmethod, when applied to English – Vietnamese translation, attains acceptable quality. Keywords: Viterbi algorithm, example based translation, convolutional code, natural processing language, example retrieval. 1. Introduction An Example-Based Machine Translation (EBMT) system retrieves the translation examples that are most similar to an input expression and adjusts the examples to obtain the translation. The two most popular translation example unit is phrase and sentence. In phrase unit, the translations of phrases are combined into a translation to form the input sentence. Although a phrase unit is a suitable translation example unit in many case because of its generality for covering various sentences, there is a risk of mixing errors or producing unnatural translations while combining phrases into a sentence [8]. To deal with these risk, we use the translation example unit is a sentence. In this kind of translation, a example similar enough to an input sentence as a whole results in an accurate and natural translation of the input sentence. Because of its generality, an EBMT system whose translation example unit is a sentence suffers from the problem of narrow coverage because a sentence is a longer unit and its generality is lower. To achieve sufficiently broad translation coverage by using sentence-wise EBMT, a large-scale parallel corpus must be prepared and, therefore, an efficient method is needed to retrieve translation examples from a large-scale corpus. In this paper, we propose a retrieval method for a EBMT system, whose measure of similarity is edit-distance. An efficient retrieval method for EBMT has much in common with Translation Memory. Many work on Translation Memory (include: [7, 4, 5, 3]) concentrate on filtering algorithms for sentence sets and/or matching algorithms between two sentences. The methods adopting filtering algorithms, first, filter sentences out by using, for example, a clustering technique that applies word vectors. Then, for each of the sentences left as candidates, they repeat Ngày nhận bài: 15/7/2015. Ngày nhận đăng: 10/11/2015. Liên hệ: Minh Quang Nguyen, e-mail: quangnm@hnue.edu.vn 3 Minh Quang Nguyen the matching procedure between the two sentences, a candidate and the input. Unfortunately, these methods sometimes omit the most similar sentences in the filtering process. On the other hand, this paper proposes an efficient retrieval method without omissions, as a solution for the problem of searching for the sentences with the least edit distance among a corpus. This method does not repeat the matching procedure between two sentences, but proceeds to match the input sentence and sentences in a corpus concurrently, where the sentences are expressed as a graph. Our previous work [6] build an method applied for Vietnamese example retrieval using A*Search. The result of our algorithm is identical to that retrieved from edit distance method but with lower complexity. However, to apply it in practical system, we have to build a large number of graphs because of the limitation of each graph. In addition, we must rebuild a graph when a sentence is added to the corpus. In this paper, we propose a new method to pick an example from corpus using Viterbi algorithm. Output of our algorithm is a hybrid of sentence unit and phrase unit: part of sentence from the beginning which is similar to the input. Our simulation and result show that EBMT system can get a feasible quality translation with low complexity retrieval. 2. Content 2.1. The EBMT overview There are 3 components in a conventional example based system: • Matching Fragment Component. • Word Alignment. • Combination between the input and the example sentence carried out to provide the final target sentence. For example: 1. He buys a book on international politics 2. a. He buys a notebook. b. Anh ay mua mot quyen sach. 3. Anh ay mua mot quyen sach ve chinh tri the gioi. With the input sentence (1), the translation (3) can be provided by matching and adapting from (2a, b). One of the main advantages of this method is that, we can improve the quality of translation easily by widen the amount example set. The more items add, the better we have. It’s useful to apply for a specific field because the limit of form of the sentence included in these fields. For example, we use it to translate manuals of product, or weather forecast, or medical diagnosis. The difficulty to apply EBMT in Vietnamese is that, there’s no word-net in Vietnamese, so we promote some new solutions to this problem. We build a system with 3 steps: • Form the set of example sentences, the result is the set of graphs. • Carry out the most popular example sentence to the input sentence. From an input sentence, by using “edit distance” measuring, the system will find sentences which are the most similar to it. Edit distance is used for fast approximate between sentences, the smaller distance, and the greater similarity between sentences. • Adjust the gap between the example and the input. 4 Viterbi algorithm for English-Vietnamese sentence retrieval in EBMT 2.2. Data source and model In traditional strategy, there are two units can be used: sentence and phrase. If sentence unit is used, we have to find out the most similar sentence to input from the bilingual corpus. If phrase unit is used, input is divided into phrase, each phrase is translate independently by searching for its similar phrase from the corpus. In contrast, our approach try to find the part of a sentence that has similar grammar structure to the input and the translation would be done with this part. In particular, our EBMT should determine the “best possible” sequence of words from the beginning of each one in corpus. There are many ways of defining “best”, but one that is especially appealing is the most likely sequence of states that must have been traversed. 2.2.1. Data source description Bilingual corpus: this is the set of example sentences. This set includes pairs of sentences. Each sentence is performed as a word sequence. Spreading the size of the set will improve the quality of translation. The sentences are word sequences. We divide the words into 2 groups • Functional word: Functional words (or grammatical words or auto-semantic words) are words that have little lexical meaning or have ambiguous meaning, but instead serve to express grammatical relationships with other words within a sentence, or specify the attitude or mood of the speaker. • Content word: Words that are not function words are called content words (or open class words or lexical words): these include nouns, verbs, adjectives, and most adverbs, although some adverbs are function words (e.g., then and why). 2.2.2. Bilingual corpus model The trellis is a structure derived from the state machine that will allow us to develop an efficient way to decode convolutional codes. In this kind of code, decoder try to find the sequence of bit that has minimum distance from a input sequence. Similarly, we use trellis to find a part of sentence which is similar to input. Each column of the trellis has the set of states; each state in a column is connected to several states in the next column. The link from each state in a column of the trellis shows what gets transmitted on an identification of words as nouns, verbs, adjectives, adverbs, etc. The link from one state to other state show that, there is at least one sentence in the corpus that has transfer between these states by a word inside. The decoder needs to do several works in terms of this trellis. It gets a sequence of words, and needs to determine the best path through the trellis — that is, the sequence of states in the trellis that can explain the observed, and possibly corrupted, sequence of word. • Each node represent a state. • Each edge is corresponds to a word in a sentence. • Each sentence is corresponds to a path from start node. An example of word trellis is shown in Figure 1. The trellis describes 3 sentences: 1. I agree with you 2. I recommend this one 3. I want this one 4. You mean this one 5. You can make it 6. You can try one 5 Minh Quang Nguyen Figure 1. A word trellis example 2.3. The Viterbi algorithm 2.3.1. Original Viterbi algorithm The Viterbi algorithm [2] is a dynamic programming algorithm for finding the most likely sequence of hidden states – called the Viterbi path – that results in a sequence of observed events, especially in the context of Markov information sources and hidden Markov models. The algorithm has found universal application in decoding the convolutional codes [9] used in both CDMA and GSM digital cellular, dial-up modems, satellite, deep-space communications, and 802.11 wireless LANs. It is now also commonly used in speech recognition, speech synthesis, diarization, keyword spotting, computational linguistics, and bioinformatics. For example, in speech-to-text (speech recognition), the acoustic signal is treated as the observed sequence of events, and a string of text is considered to be the ”hidden cause” of the acoustic signal. The Viterbi algorithm finds the most likely string of text given the acoustic signal. Suppose we are given a hidden Markov model with state space S, initial probabilities πi of being in state i and transition probabilities ai,j of transitioning from state i to state j. Say we observe outputs y1, ..., yT . The most likely state sequence x1, ..., xT that produces the observations is given by the recurrence relations: V1,k = P (y1|k).πk Vt,k = maxx∈S(P (yt|k).ax,k.Vt−1,x) Here Vt,k is the probability of the most probable state sequence responsible for the first t observations that has k as its final state. The Viterbi path can be retrieved by saving back pointers that remember which state x was used in the second equation. Let Ptr(k, t) be the function that returns the value of x used to compute Vt,k if t > 1, or k if t = 1. Then: xT = argmaxx∈S(VT , x) x(t− 1) = Ptr(xt, t) 2.3.2. Viterbi algorithm for sentence retrieval The decoding algorithm uses two metrics: the branch metric (BM) and the path metric (PM). The branch metric is a measure of the ”distance” between what was in input sequence and what was in the most similar part, and is defined for each arc in the trellis. When we are given a word sequence, the branch metric is the distance between the expected sequence and the given ones. The BM is defined on each transition as follow: 6 Viterbi algorithm for English-Vietnamese sentence retrieval in EBMT • If the word of transition and the word of input are identical, then the distance is 0. • If they are different words, but the identifications them are the same, then the distance is 1. • Otherwise, the distance is 2. The path metric is a value associated with a state in the trellis (i.e., a value associated with each node). It corresponds to the distance over the most likely path from the initial state to the current state in the trellis. By ”most likely”, we mean the path with smallest distance between the initial state and the current state, measured over all possible paths between the two states. The path with the smallest distance minimizes the total number of differences between two word sequences. Suppose the receiver has computed the path metric PM [s, i] for each state s at time step i. The value of PM [s, i] is the total distance when comparing the input word sequence to the most likely sequence, considering all word that could have been processed until time step i. Among all the possible states at time step i, the most likely state is the one with the smallest path metric. If there is more than one such state, they are all equally good possibilities. If the trellis is at state s at time step i + 1, then it must have been in only one of several possible states at time step i. For example, there are 2 predecessor states, labeled α and β, they are always the same for a given state. Any message sequence that leaves the trellis in state s at time i + 1 must have left the trellis in state α or state β at time i. For 2 predecessor states, we have the formula: PM [s, i+ 1] = min(PM [α, i] +BM [α→ s], PM [β, i] +BM [β → s]) The main loop of the algorithm consists of two main steps: first, calculating the branch metric for the next set of parity bits, and second, computing the path metric for the next column. The path metric computation may be thought of as an add-compare-select procedure: 1) Add the branch metric to the path metric for the old state. 2) Compare the sums for paths arriving at the new state (there are only two such paths to compare at each new state because there are only two incoming arcs from the previous column). 3) Select the path with the smallest value, breaking ties arbitrarily. This path corresponds to the one with fewest errors. 2.3.3. A word about complexity The time to extract a sentence depends mainly on K; as described, we need to process O(2K) transitions each word, so the time complexity is exponential inK . Moreover, as described, we can find the first word of the message only at the very end. 2.4. Simulation and result 2.4.1. Experimental condition We made manually an English-Vietnamese corpus including 6000 pairs of sentences. To evaluate translation quality, we employed subjective measure. Each translation result was graded into one of four ranks by bilingual human translator who is native speaker in Vietnamese. The four ranks were: • A: Perfect, no problem with both grammar and information. Translation quality is nearly equal to human translator. • B: Fair. The translation is easy to understand but some grammatical mistake or missing some trivial information. • C: Acceptable. The translation is broken but able to understand with effort. • D: Nonsense: Important information was translated incorrectly. 7 Minh Quang Nguyen The English - Vietnamese dictionary used includes 70,000 words. To optimize the processing time, a threshold is used to limit the result set of Example retrieval phase. Table 2 show the threshold we used to optimize example retrieval phase with sentence’s length smaller than 30. If length of input sentence is greater than or equal to 30, threshold is 8. 2.4.2. Performance For the experiment, we create two test sets: a test set of random sentence with complex grammatical structure and a set of 50 sentences edited from the training set. Under these conditions, the average processing time is less than 0.5 second for each providing each translation. Although the processing time increases as the corpus size increases, the increasing scale is not linear but about a half power of corpus size. Compare to DP-matching [1], the method used to retrieve example with word graph and A*Search achieves efficient processing. Using the threshold 0.2 with random sentences, where the time processing is significantly decreased, and the translation quality is low. The reason is that we used the bilingual corpus with size is too small. As a result, examples approached are not similar enough to the input sentence. There are two ways to increase translation quality. Firstly, we widen the size of example set. Secondly, since we have not any appropriate way to choose the right meaning from bilingual dictionary, we apply the context-based translation to EBMT system. The table 1 and the table 2 illustrate the evaluation of result. For the set of edited sentences (table 1), the system reached high translation quality with the Precision of 70%. Items in this set have grammar structure and word type similar to example set, this makes EBMT system find the suitable sentences to translate. For the set of random sentences (table 4), because it contains a number of complex sentences, so that the examples EBMT system reach is not similar enough to the input, consequently, the result has low quality (Only 50% sentences translated with quality rank of A or B, the rest is at rank C or D). System can translate sentences with complex grammatical structure as long as the example approached is similar enough to the input sentences. Table 1. Set of edited sentences and performance Rank A B C D Total 20 19 3 11 Average length of sentences 8.0 6.7 7.7 8.0 Table 2. Set of random sentences and performance Rank A B C D Total 13 10 3 30 Average length of sentences 5.7 5.6 6.0 8.0 3. Conclusion We report on a retrieval method for an EBMT system using edit-distance and evaluation of its performance using our corpus. In experiments for performance evaluation, we used bilingual corpus comprising 6000 sentences from every field. The reasons cause some low quality translation is the small size of bilingual corpus. The EBMT system will provide a better performance when it runs into a specific field. For example, we use EBMT to translate the manuals of productions, or introductions in travel field. Experiment results show that the EBMT system 8 Viterbi algorithm for English-Vietnamese sentence retrieval in EBMT achieved feasible translation ability, and also achieved effort processing by using the proposed retrieval method. Acknowledgement. This research was supported by SPHN-13-277 project, Hanoi National University of Education. We thank our colleagues from Faculty of Information and Technology who provided insight and expertise that greatly assisted the research. REFERENCES [1] Example based machine translation. wikipedia.com. Accessed: 2010-09-30. [2] LR Bahl, J Cocke, F Jelinek, and J Raviv, 1974. 284 ieee transacttons on information theory, march 1974. [3] Timothy Baldwin and Hozumi Tanaka, 2001. Balancing up effciency and accuracy in translation retrieval. 8(2):19-37. [4] Lambros Cranias, Harris Papageorgiou, and Stelios Piperidis, 1997. Example retrieval from a translation memory. Natural Language Engineering, 3(04):255-277. [5] Emmanuel Planas and Osamu Furuse, 1999. Formalizing translation memories. In Machine Translation Summit VII, pages 331-339. [6] NguyenMinh Quang and Tran Dang Hung. Using example-based machine translation for english-vietnamese translation. [7] Satoshi Sato, 1992. Ctm: An example-based translation aid system. In Proceedings of the 14th conference on Computational linguistics, Volume 4, pages 1259-1263. Association for Computational Linguistics. [8] Harold Somers, 2003. Computers and translation: a translator’s guide. John Benjamins Publishing, Volume 35. [9] Eiichiro Sumita, 2001. Example-based machine translation using dp-matching between word sequences. In Proceedings of the workshop on Data-driven methods in machine translation-Volume 14, pages 1-8. Association for Computational Linguistics. 9

File đính kèm:

viterbi_algorithm_for_english_vietnamese_sentence_retrieval.pdf

viterbi_algorithm_for_english_vietnamese_sentence_retrieval.pdf